Research

With the growing deployment of vehicular communication technologies (e.g., V2X and 5G cellular), vehicles and infrastructure are becoming connected to each other and to the cloud. This connectivity enables a fundamental shift: rather than confining 3D data operations to individual vehicles or sensors, systems can now stream, aggregate, interpret, and deliver 3D data across the network. This shift brings new opportunities as well as new challenges: the question is no longer only how to sense or store 3D data, but how to process and manipulate it effectively in a cloud-connected vehicular ecosystem.

My research explores how 3D data can be elevated to a shared and networked resource that is collaboratively sensed, processed, and delivered across vehicles, infrastructure, and cloud systems:

- [Reconstruction] How to collect and reconstruct 3D traffic scenes by aggregating data from distributed sensors

- [Interpretation] How to extract semantic understanding from the reconstructed scene in the cloud in real time

- [Delivery] How to deliver rich 3D content from the cloud to in-vehicle augmented reality (AR) displays

Ultimately, my research envisions a future where 3D data is no longer treated merely as a local sensing artifact, but as a shared medium that enables collaborative perception, coordinated decision making, and immersive vehicular experiences.

Reconstruction

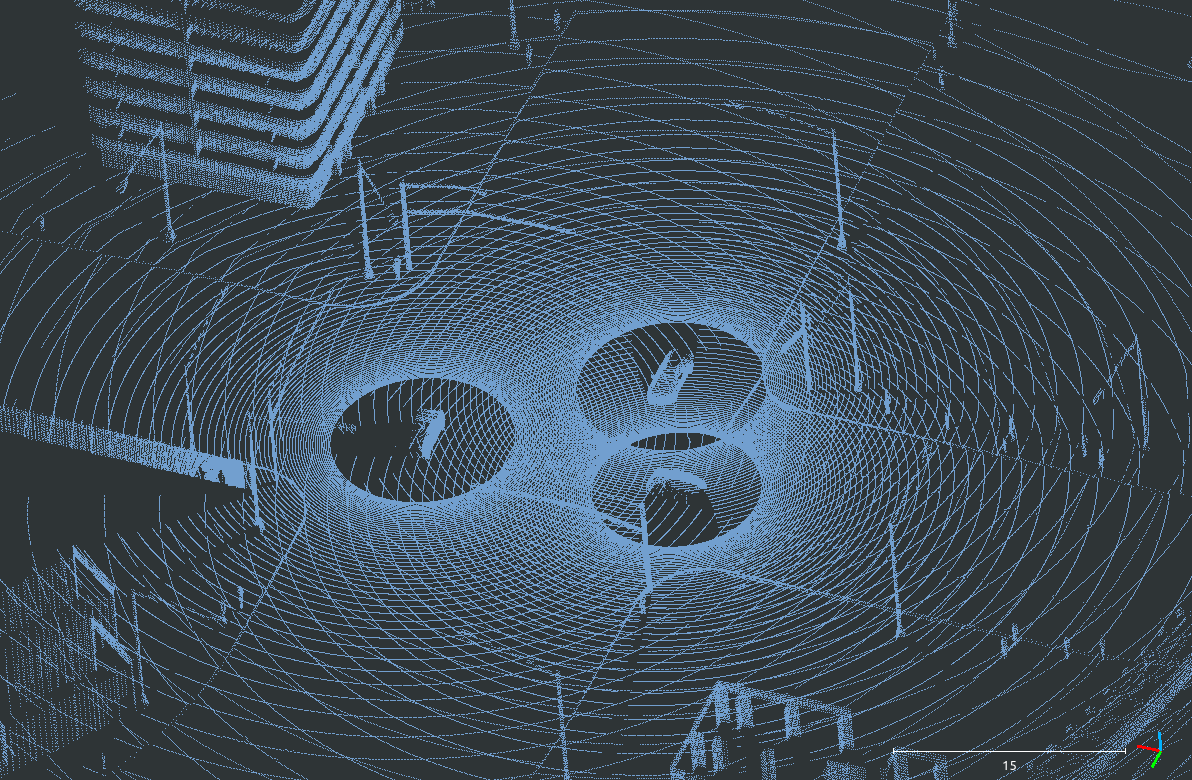

RECAP: 3D Traffic Reconstruction

The core challenge in RECAP lies in accurately and quickly fusing large volumes of 3D data from dynamic, sparsely overlapping views across space and time. Building upon prior work on 3D view fusion, RECAP introduces techniques that minimize reconstruction error and computational cost in these highly asynchronous observation scenarios.
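As a rough illustration of the basic step that multi-sensor 3D fusion builds on, the sketch below assumes each sensor's pose in a shared world frame is already known, transforms every point cloud into that frame with a rigid transform, and keeps only captures taken close to a reference time. This is not RECAP's technique: the names `SensorFrame`, `to_world`, `fuse`, and the `max_skew` gating parameter are hypothetical, and naive time gating is exactly the kind of baseline that loses information when observations are highly asynchronous.

```python
import math
from dataclasses import dataclass

Point = tuple[float, float, float]


@dataclass
class SensorFrame:
    """One sensor capture (hypothetical structure): local points plus a known world pose."""
    points: list[Point]  # points in the sensor's own coordinate frame
    yaw: float           # sensor heading in radians (rotation about the vertical z axis)
    tx: float            # sensor position in the shared world frame
    ty: float
    tz: float
    timestamp: float     # capture time in seconds


def to_world(frame: SensorFrame) -> list[Point]:
    """Apply the rigid transform p_world = R_z(yaw) @ p_local + t to every point."""
    c, s = math.cos(frame.yaw), math.sin(frame.yaw)
    return [
        (c * x - s * y + frame.tx, s * x + c * y + frame.ty, z + frame.tz)
        for x, y, z in frame.points
    ]


def fuse(frames: list[SensorFrame], t_ref: float, max_skew: float) -> list[Point]:
    """Merge all frames captured within max_skew seconds of t_ref into one world-frame cloud.

    Naive time gating: frames outside the window are dropped rather than
    motion-compensated, which is where asynchronous inputs lose information.
    """
    fused: list[Point] = []
    for frame in frames:
        if abs(frame.timestamp - t_ref) <= max_skew:
            fused.extend(to_world(frame))
    return fused


# Usage: two roughly synchronized sensors and one stale capture.
a = SensorFrame([(1.0, 0.0, 0.0)], yaw=0.0, tx=0.0, ty=0.0, tz=0.0, timestamp=0.00)
b = SensorFrame([(1.0, 0.0, 0.0)], yaw=math.pi / 2, tx=10.0, ty=0.0, tz=0.0, timestamp=0.05)
stale = SensorFrame([(5.0, 5.0, 0.0)], yaw=0.0, tx=0.0, ty=0.0, tz=0.0, timestamp=0.50)
cloud = fuse([a, b, stale], t_ref=0.0, max_skew=0.1)
print(len(cloud))  # 2: the stale frame is excluded
```

Sensor `b` is rotated 90° and placed 10 m along x, so its local point (1, 0, 0) lands at roughly (10, 1, 0) in the world frame; the stale frame falls outside the 0.1 s window and is discarded.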

Interpretation

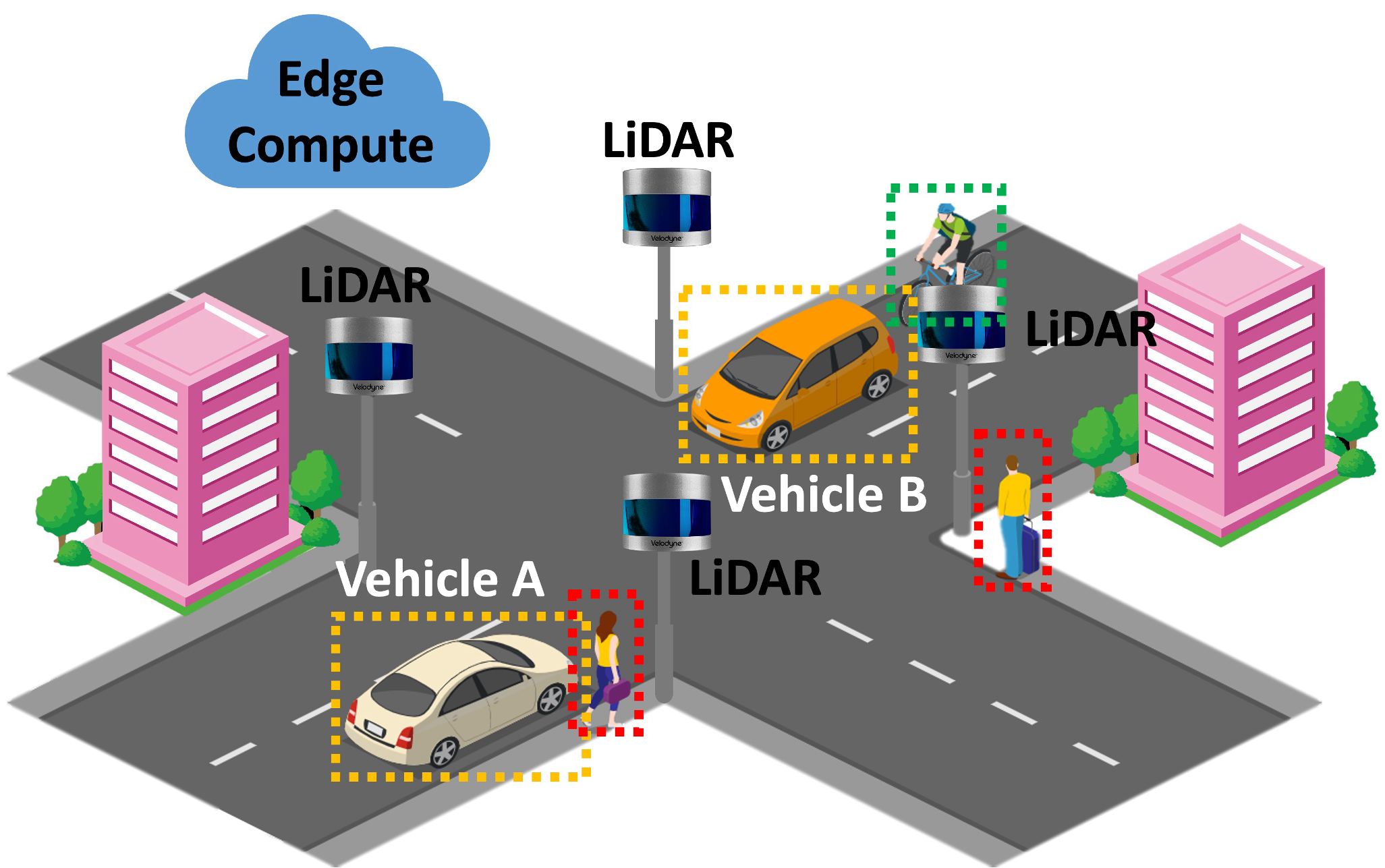

Cooperative Infrastructure Perception

To generate perception outputs fast enough for autonomous driving, Cooperative Infrastructure Perception (CIP) must process large-scale 3D scenes within 100 ms while matching or exceeding the accuracy of state-of-the-art vision algorithms. To meet this requirement, CIP introduces a novel alignment algorithm for accurate view fusion, along with efficient implementations of key perception tasks, including dynamic object extraction, tracking, and motion estimation.
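To make the listed perception tasks concrete, here is a minimal sketch of one common pipeline shape, under stated assumptions: a precomputed static-background voxel map, dynamic object extraction by background subtraction, clustering of the remaining points by voxel connectivity, and tracking by nearest-centroid association with velocity estimated from centroid displacement. All names and parameters (`VOXEL_SIZE`, `FRAME_BUDGET_S`, `process_frame`, and so on) are hypothetical, and this is a textbook baseline rather than CIP's alignment or perception algorithms; it only shows where a 100 ms per-frame budget would be checked.

```python
import math
import time
from collections import defaultdict

Point = tuple[float, float, float]
Voxel = tuple[int, int, int]

VOXEL_SIZE = 0.5        # metres per voxel edge (assumed value)
FRAME_BUDGET_S = 0.100  # 100 ms per-frame latency target stated in the text


def voxel_of(p: Point, size: float = VOXEL_SIZE) -> Voxel:
    """Integer voxel index containing point p."""
    return (math.floor(p[0] / size), math.floor(p[1] / size), math.floor(p[2] / size))


def extract_dynamic(points: list[Point], background: set[Voxel]) -> list[Point]:
    """Background subtraction: keep points whose voxel is not in the static map."""
    return [p for p in points if voxel_of(p) not in background]


def cluster(points: list[Point]) -> list[list[Point]]:
    """Group points into objects via flood fill over 26-connected occupied voxels."""
    cells: dict[Voxel, list[Point]] = defaultdict(list)
    for p in points:
        cells[voxel_of(p)].append(p)
    unvisited = set(cells)
    objects = []
    while unvisited:
        stack = [unvisited.pop()]
        obj: list[Point] = []
        while stack:
            v = stack.pop()
            obj.extend(cells[v])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    for dz in (-1, 0, 1):
                        n = (v[0] + dx, v[1] + dy, v[2] + dz)
                        if n in unvisited:
                            unvisited.remove(n)
                            stack.append(n)
        objects.append(obj)
    return objects


def centroid(obj: list[Point]) -> Point:
    n = len(obj)
    return tuple(sum(p[i] for p in obj) / n for i in range(3))


def track(prev: list[Point], curr: list[Point], dt: float, max_dist: float = 2.0) -> dict[int, Point]:
    """Nearest-centroid association (not one-to-one); velocity = displacement / dt."""
    velocities = {}
    for i, c in enumerate(curr):
        best = min(prev, key=lambda q: math.dist(q, c), default=None)
        if best is not None and math.dist(best, c) <= max_dist:
            velocities[i] = tuple((a - b) / dt for a, b in zip(c, best))
    return velocities


def process_frame(points, background, prev_centroids, dt):
    """Run one frame and report whether it finished within the latency budget."""
    start = time.perf_counter()
    objects = cluster(extract_dynamic(points, background))
    cents = [centroid(o) for o in objects]
    velocities = track(prev_centroids, cents, dt)
    within_budget = time.perf_counter() - start <= FRAME_BUDGET_S
    return cents, velocities, within_budget


# Usage: one static point (in the background map) and one moving object.
background = {voxel_of((0.1, 0.1, 0.1))}
frame = [(0.1, 0.1, 0.1), (10.0, 0.0, 0.0), (10.2, 0.0, 0.0)]
cents, vels, ok = process_frame(frame, background, prev_centroids=[(9.1, 0.0, 0.0)], dt=0.1)
print(cents, vels, ok)  # one object near x = 10.1 moving at about +10 m/s along x
```

Greedy nearest-centroid matching is used here only because it is short; a production tracker would typically enforce one-to-one assignment and smooth velocities over several frames.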

Delivery

3D Video Delivery to In-Vehicle AR Displays (under submission)

While RECAP and CIP demonstrate how vehicles and infrastructure can collaboratively sense and interpret the traffic environment, this work extends that vision by exploring how 3D data can also serve human-facing applications such as in-vehicle infotainment, navigation assistance, and spatially aware content delivery.

This work is motivated by the growing availability of augmented rendering devices, such as augmented reality head-up displays (HUDs), in modern vehicles. These devices open the door to streaming 3D video as a spatially immersive medium that offers passengers more expressive visual experiences.

Delivering such content in a mobile vehicular context introduces new technical challenges:

- Bandwidth constraints must be addressed to support the large size and complexity of 3D video streams, especially under highly dynamic network conditions.

- Adaptation to vehicle motion is required to maintain spatial coherence and rendering accuracy during playback, accounting for changes in the vehicle's position and speed.
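The two challenges above can be illustrated with deliberately simple stand-ins, not the techniques used in this work: a rate-based adaptive-bitrate rule that picks the highest quality level fitting under a safety fraction of a harmonic-mean throughput estimate, and constant-velocity extrapolation of the vehicle's pose over the delivery latency, so that world-anchored content is placed relative to where the vehicle will be when a frame is shown. The quality ladder `LEVELS`, the `safety` factor of 0.8, and all function names are hypothetical assumptions.

```python
import math
from dataclasses import dataclass

# Hypothetical quality ladder for encoded 3D video: (label, bitrate in Mbit/s).
LEVELS = [("low", 5.0), ("medium", 15.0), ("high", 40.0), ("ultra", 80.0)]


def estimate_throughput(samples_mbps: list[float]) -> float:
    """Harmonic mean of recent throughput samples; less sensitive to brief spikes."""
    positive = [s for s in samples_mbps if s > 0]
    if not positive:
        return 0.0
    return len(positive) / sum(1.0 / s for s in positive)


def choose_level(samples_mbps: list[float], safety: float = 0.8) -> str:
    """Pick the highest level whose bitrate fits under safety * estimated throughput."""
    budget = safety * estimate_throughput(samples_mbps)
    chosen = LEVELS[0][0]  # fall back to the lowest level if nothing fits
    for label, bitrate in LEVELS:
        if bitrate <= budget:
            chosen = label
    return chosen


@dataclass
class VehicleState:
    x: float        # world position in metres
    y: float
    heading: float  # radians, counter-clockwise from the world x axis
    speed: float    # metres per second


def predict_pose(state: VehicleState, latency_s: float) -> tuple[float, float, float]:
    """Constant-speed, constant-heading extrapolation over the delivery latency."""
    d = state.speed * latency_s
    return (state.x + d * math.cos(state.heading), state.y + d * math.sin(state.heading), state.heading)


def world_to_vehicle(px: float, py: float, pose: tuple[float, float, float]) -> tuple[float, float]:
    """Express a world-anchored point in the vehicle frame (x forward, y left)."""
    vx, vy, h = pose
    dx, dy = px - vx, py - vy
    c, s = math.cos(h), math.sin(h)
    return (c * dx + s * dy, -s * dx + c * dy)


# Usage: a spiky throughput history and a vehicle moving at 20 m/s with 100 ms latency.
print(choose_level([10.0, 100.0]))  # prints low
pose = predict_pose(VehicleState(x=0.0, y=0.0, heading=0.0, speed=20.0), latency_s=0.1)
print(world_to_vehicle(12.0, 0.0, pose))  # about (10.0, 0.0): the anchor is 10 m ahead
```

With throughput samples of 10 and 100 Mbit/s, the harmonic mean is about 18.2 Mbit/s, so with the 0.8 safety factor `choose_level` returns `"low"`; an arithmetic mean (55 Mbit/s) would have selected `"high"` and risked stalls. Likewise, without pose prediction the anchor 12 m ahead would be drawn 2 m too far away after 100 ms at 20 m/s.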